|

|

Publications

|

Monoxide: Scale out Blockchains with Asynchronous Consensus Zones

Jiaping Wang, Hao Wang

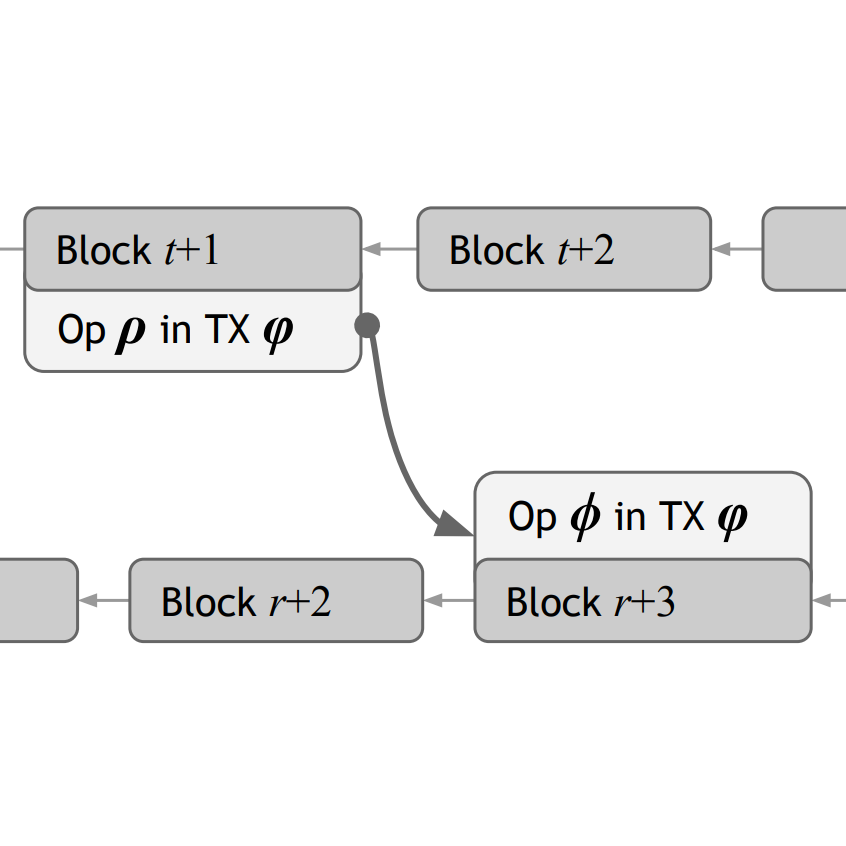

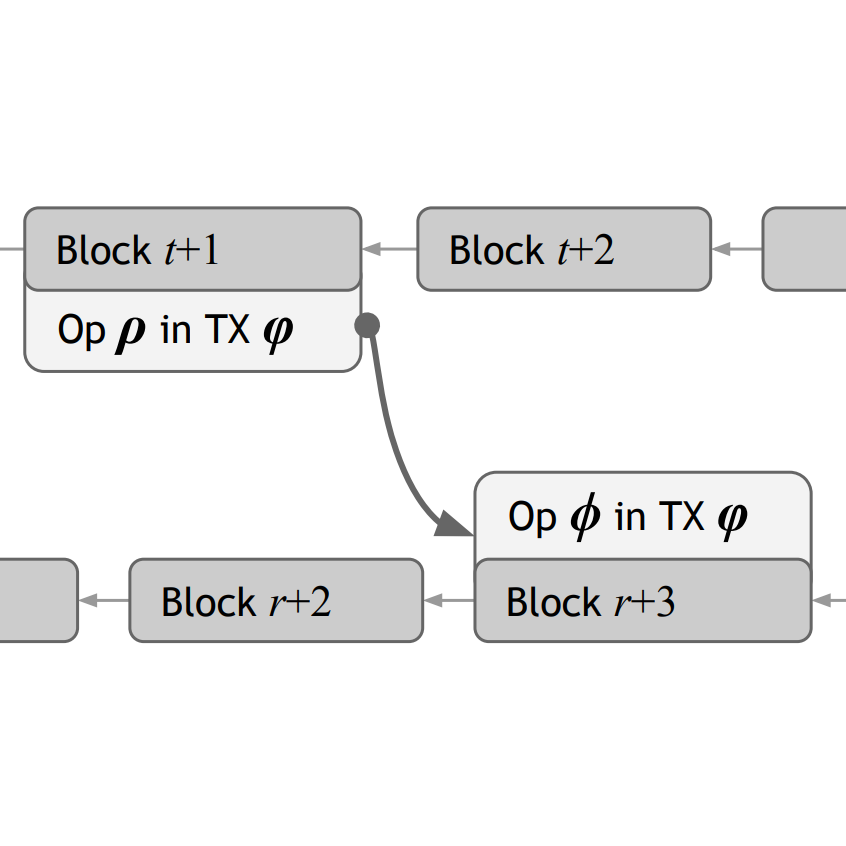

Cryptocurrencies have provided a promising infrastructure for pseudonymous online payments. However, low throughput has significantly hindered the scalability and usability of cryptocurrency systems for increasing numbers of users and transactions. Another obstacle to achieving scalability is that every node is required to duplicate the communication, storage, and state representation of the entire network.

In this paper, we introduce Asynchronous Consensus Zones, which scale blockchain systems linearly without compromising decentralization or security. We achieve this by running multiple independent and parallel instances of single-chain consensus (zones). Consensus happens independently within each zone with minimized communication, which partitions the workload of the entire network and ensures a moderate burden for each individual node as the network grows. We propose eventual atomicity to ensure transaction atomicity across zones, which guarantees the efficient completion of transactions without the overhead of a two-phase commit protocol. We also propose Chu-ko-nu mining to ensure that the effective mining power in each zone is at the same level as that of the entire network, making an attack on any individual zone as hard as an attack on the entire network. Our experimental results show the effectiveness of our work. On a test-bed including 1,200 virtual machines worldwide to support 48,000 nodes, our system delivers 1,000× throughput and 2,000× capacity over the Bitcoin and Ethereum networks.

NSDI 2019 [ paper ] [ project ] [ bibtex ]

16th USENIX Symposium on Networked Systems Design and Implementation, February 2019, Boston, MA
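As a rough illustration of the zone partitioning and eventual atomicity described in the abstract above, the following toy sketch splits accounts across zones and completes a cross-zone payment in two separate steps instead of a two-phase commit. Everything in it is an assumption made for illustration: the hash-prefix zone assignment, the `Zone` class, the `K` constant, and the in-memory relay queue are hypothetical and do not reproduce the paper's implementation, and consensus, blocks, and mining are left out entirely.

```python
import hashlib
from collections import deque

K = 2  # hypothetical sharding scale: 2**K zones (must be <= 8 in this sketch)


def zone_of(address: str, k: int = K) -> int:
    """Map an address to a zone using the top k bits of its SHA-256 hash."""
    digest = hashlib.sha256(address.encode()).digest()
    return digest[0] >> (8 - k)


class Zone:
    """One zone that stores only the balances of its own addresses."""

    def __init__(self, index: int):
        self.index = index
        self.balances = {}
        self.inbox = deque()  # relayed deposits waiting to be applied

    def withdraw(self, sender, receiver, amount, zones):
        """Step 1: debit the sender locally, then relay the deposit."""
        if self.balances.get(sender, 0) < amount:
            return False
        self.balances[sender] -= amount
        zones[zone_of(receiver)].inbox.append((receiver, amount))
        return True

    def process_inbox(self):
        """Step 2: the receiving zone applies relayed deposits later."""
        while self.inbox:
            receiver, amount = self.inbox.popleft()
            self.balances[receiver] = self.balances.get(receiver, 0) + amount
```

In this sketch the sender's zone never waits on the receiver's zone: once the withdrawal succeeds, the deposit is only queued and takes effect whenever the receiving zone calls `process_inbox()`.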

|

|

Efficient Reflectance Capture Using an Autoencoder

Kaizhang Kang, Zimin Chen, Jiaping Wang, Kun Zhou, Hongzhi Wu

We propose a novel framework that automatically learns the lighting patterns for efficient reflectance acquisition, as well as how to faithfully reconstruct spatially varying anisotropic BRDFs and local frames from measurements under such patterns. The core of our framework is an asymmetric deep autoencoder, consisting of a nonnegative, linear encoder which directly corresponds to the lighting patterns used in physical acquisition, and a stacked, nonlinear decoder which computationally recovers the BRDF information from captured photographs. The autoencoder is trained with a large amount of synthetic reflectance data, and can adapt to various factors, including the geometry of the setup and the properties of appearance. We demonstrate the effectiveness of our framework on a wide range of physical materials, using as few as 16 ~ 32 lighting patterns, which correspond to 12 ~ 25 seconds of acquisition time. We also validate our results with the ground truth data and captured photographs. Our framework is useful for increasing the efficiency in both novel and existing acquisition setups.

ACM SIGGRAPH 2018 [ paper ] [ video ] [ project ] [ bibtex ]

ACM Transactions on Graphics Volume 37, Number 4, August 2018

|

|

Vector Regression Functions for Texture Compression

Ying Song, Jiaping Wang, Liyi Wei, Wencheng Wang

Raster images are the standard format for texture mapping, but they suffer from limited resolution. Vector graphics are resolution-independent but are less general and more difficult to implement on a GPU. We propose a hybrid representation called vector regression functions (VRFs), which compactly approximate any point-sampled image and support GPU texture mapping, including random access and filtering operations. Unlike standard GPU texture compression, VRFs provide a variable-rate encoding in which piecewise smooth regions compress to the square root of the original size. Our key idea is to represent images using a multilayer perceptron, allowing general encoding via regression and efficient decoding via a simple GPU pixel shader. We also propose a content-aware spatial partitioning scheme to reduce the complexity of the neural network model. We demonstrate the benefits of our method, including its quality, size, and runtime speed.

ACM TOG 2015 [ paper ] [ bibtex ]

ACM Transactions on Graphics Volume 35, Number 1, December 2015

|

|

Global Illumination with Radiance Regression Functions

Peiran Ren, Jiaping Wang, Minmin Gong, Stephen Lin, Xin Tong, Baining Guo

We present radiance regression functions for fast rendering of global illumination

in scenes with dynamic local light sources. A radiance regression function (RRF)

represents a non-linear mapping from local and contextual attributes of surface

points, such as position, viewing direction, and lighting condition, to their indirect

illumination values. The RRF is obtained from precomputed shading samples through

regression analysis, which determines a function that best fits the shading data.

For a given scene, the shading samples are precomputed by an offline renderer.

The key idea behind our approach is to exploit

the nonlinear coherence of the indirect illumination data to make the RRF both compact

and fast to evaluate. We model the RRF as a multilayer acyclic feed-forward neural

network, which provides a close functional approximation of the indirect illumination

and can be efficiently evaluated at run time. To effectively model scenes with

spatially variant material properties, we utilize an augmented set of attributes

as input to the neural network RRF to reduce the amount of inference that the network

needs to perform. To handle scenes with greater geometric complexity, we partition

the input space of the RRF model and represent the subspaces with separate, smaller

RRFs that can be evaluated more rapidly. As a result, the RRF model scales well

to increasingly complex scene geometry and material variation. Because of its compactness

and ease of evaluation, the RRF model enables real-time rendering with full global

illumination effects, including changing caustics and multiple-bounce high-frequency

glossy interreflections.

ACM SIGGRAPH 2013 [ paper ] [ video ] [ bibtex ]

ACM Transactions on Graphics Volume 32, Number 4, July 2013

|

|

Interactive chromaticity mapping for multispectral images

Yanxiang Lan, Jiaping Wang, Stephen Lin, Minmin Gong, Xin Tong, Baining Guo

Multispectral images record detailed color spectra at each image pixel. To display a multispectral image on conventional output devices, a chromaticity mapping function is needed to map the spectral vector of each pixel to the displayable three-dimensional color space. In this paper, we present an interactive method for locally adjusting the chromaticity mapping of a multispectral image. The user specifies edits to the chromaticity mapping via a sparse set of strokes at selected image locations and wavelengths, and our method then automatically propagates the edits to the rest of the multispectral image. The key idea of our approach is to factorize the multispectral image into a component that indicates spatial coherence between different pixels, and one that describes spectral coherence between different wavelengths. Based on this factorized representation, a two-step algorithm is developed to efficiently propagate the edits in the spatial and spectral domains separately. The method presented provides photographers with efficient control over color appearance and scene details in a manner not possible with conventional color image editing. We demonstrate the use of interactive chromaticity mapping in the applications of color stylization to emulate the appearance of photographic films, enhancement of image details, and manipulation of different light transport effects.

[ paper ] [ bibtex ]

The Visual Computer volume 29, May 2013

|

|

Pocket Reflectometry

Peiran Ren, Jiaping Wang, John Snyder, Xin Tong, Baining Guo

We present a simple, fast solution for reflectance acquisition using tools that

fit into a pocket. Our method captures video of a flat target surface from a fixed

video camera lit by a hand-held, moving, linear light source. After processing,

we obtain an SVBRDF. We introduce a BRDF chart, analogous to a color "checker" chart,

which arranges a set of known-BRDF reference tiles over a small card. A sequence

of light responses from the chart tiles as well as from points on the target is

captured and matched to reconstruct the target's appearance.

We develop a new algorithm for BRDF reconstruction which works directly on these

LDR responses, without knowing the light or camera position, or acquiring HDR lighting.

It compensates for spatial variation caused by the local (finite distance) camera

and light position by warping responses over time to align them to a specular reference.

After alignment, we find an optimal linear combination of the Lambertian and purely

specular reference responses to match each target point's response. The same weights

are then applied to the corresponding (known) reference BRDFs to reconstruct the

target point's BRDF. We extend the basic algorithm to also recover varying surface

normals by adding two spherical caps for diffuse and specular references to the

BRDF chart.

ACM SIGGRAPH 2011 [ paper ] [ video ] [ bibtex ]

ACM Transactions on Graphics Volume 30, Number 4, July 2011
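The matching step described in the Pocket Reflectometry abstract above (find a linear combination of reference responses that matches a target response, then apply the same weights to the known reference BRDFs) can be sketched as a small least-squares fit. This is a simplified illustration under stated assumptions, not the paper's algorithm: it uses only two references (one Lambertian, one specular), skips the temporal alignment step, places no constraint on the weights, and the function names `fit_two_weights` and `blend_brdfs` are hypothetical.

```python
def fit_two_weights(ref_a, ref_b, target):
    """Least-squares weights (wa, wb) minimizing ||wa*ref_a + wb*ref_b - target||^2.

    All three arguments are equal-length sequences of response samples
    that are assumed to be already aligned in time.
    """
    aa = sum(x * x for x in ref_a)
    bb = sum(y * y for y in ref_b)
    ab = sum(x * y for x, y in zip(ref_a, ref_b))
    at = sum(x * t for x, t in zip(ref_a, target))
    bt = sum(y * t for y, t in zip(ref_b, target))
    # Solve the 2x2 normal equations with Cramer's rule.
    det = aa * bb - ab * ab
    if abs(det) < 1e-12:
        raise ValueError("reference responses are linearly dependent")
    wa = (at * bb - bt * ab) / det
    wb = (bt * aa - at * ab) / det
    return wa, wb


def blend_brdfs(brdf_a, brdf_b, wa, wb):
    """Apply the response weights to the known reference BRDF samples."""
    return [wa * a + wb * b for a, b in zip(brdf_a, brdf_b)]
```

For example, a target response built as 0.5 times the first reference plus 0.25 times the second is recovered with weights close to (0.5, 0.25), and `blend_brdfs` then mixes the tabulated reference BRDF samples with those same weights.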

|

|

Condenser-Based Instant Reflectometry

Yanxiang Lan, Yue Dong, Jiaping Wang, Xin Tong, Baining Guo

We present a technique for rapid capture of high quality bidirectional reflection

distribution functions (BRDFs) of surface points. Our method represents the BRDF

at each point by a generalized microfacet model with tabulated normal distribution

function (NDF) and assumes that the BRDF is symmetrical. A compact and light-weight

reflectometry apparatus is developed for capturing reflectance data from each surface

point within one second.

The device consists of a pair of condenser lenses, a video camera, and six LED light

sources. During capture, the reflected rays from a surface point lit by an LED light

are refracted by the condenser lenses and efficiently collected by the camera CCD.

Taking advantage of BRDF symmetry, our reflectometry apparatus provides an efficient

optical design to improve the measurement quality. We also propose a model fitting

algorithm for reconstructing the generalized microfacet model from the sparse BRDF

slices captured from a material surface point. Our new algorithm addresses the measurement

errors and generates more accurate results than previous work.

Our technique provides a practical and efficient solution for BRDF acquisition,

especially for materials with anisotropic reflectance. We test the accuracy of our

approach by comparing our results with ground truth. We demonstrate the efficiency

of our reflectometry by measuring materials with different degrees of specularity,

values of Fresnel factor, and angular variation.

Pacific Graphics 2010 [ paper ] [ bibtex ]

Computer Graphics Forum, Volume 29, Number 7, Oct 2010

|

|

Manifold Bootstrapping for SVBRDF Capture

Yue Dong, Jiaping Wang, Xin Tong, John Snyder, Yanxiang Lan, Moshe Ben-Ezra, Baining Guo

Manifold bootstrapping is a new method for data-driven modeling of real-world, spatially-varying

reflectance, based on the idea that reflectance over a given material sample forms

a low-dimensional manifold. It provides a high-resolution result in both the spatial

and angular domains by decomposing reflectance measurement into two lower-dimensional

phases. The first acquires representatives of high angular dimension but sampled

sparsely over the surface, while the second acquires keys of low angular dimension

but sampled densely over the surface.

We develop a hand-held, high-speed BRDF capturing device for phase one measurements.

A condenser-based optical setup collects a dense hemisphere of rays emanating from

a single point on the target sample as it is manually scanned over it, yielding

10 BRDF point measurements per second. Lighting directions from 6 LEDs are applied

at each measurement; these are amplified to a full 4D BRDF using the general (NDF-tabulated)

microfacet model. The second phase captures N=20-200 images of the entire sample

from a fixed view and lit by a varying area source. We show that the resulting N-dimensional

keys capture much of the distance information in the original BRDF space, so that

they effectively discriminate among representatives, though they lack sufficient

angular detail to reconstruct the SVBRDF by themselves. At each surface position,

a local linear combination of a small number of neighboring representatives is computed

to match each key, yielding a high-resolution SVBRDF. A quick capture session (10-20

minutes) on simple devices yields results showing sharp and anisotropic specularity

and rich spatial detail.

ACM SIGGRAPH 2010 [ paper ] [ video ] [ bibtex ]

ACM Transactions on Graphics Volume 29, Number 4, July 2010

|

|

Fabricating Spatially-Varying Subsurface Scattering

Yue Dong, Jiaping Wang, Fabio Pellacini, Xin Tong, Baining Guo

Many real world surfaces exhibit translucent appearance due to subsurface scattering.

Although various methods exist to measure, edit and render subsurface scattering

effects, no solution exists for manufacturing physical objects with desired translucent

appearance. In this paper, we present a complete solution for fabricating a material

volume with a desired surface BSSRDF. We stack layers from a fixed set of manufacturing

materials whose thickness is varied spatially to reproduce the heterogeneity of

the input BSSRDF. Given an input BSSRDF and the optical properties of the manufacturing

materials, our system efficiently determines the optimal order and thickness of

the layers. We demonstrate our approach

by printing a variety of homogeneous and heterogeneous BSSRDFs using two hardware

setups: a milling machine and a 3D printer.

ACM SIGGRAPH 2010 [ paper ] [ video ] [ bibtex ]

ACM Transactions on Graphics Volume 29, Number 4, July 2010

|

|

Real-time Rendering of Heterogeneous Translucent Objects with Arbitrary Shapes

Yajun Wang, Jiaping Wang, Nicolas Holzschuch, Kartic Subr, Jun-Hai Yong, Baining Guo

We present a real-time algorithm for rendering translucent objects of arbitrary

shapes. We approximate the scattering

of light inside the objects using the diffusion equation, which we solve on-the-fly

using the GPU. Our algorithm is general enough to handle arbitrary geometry, heterogeneous

materials, deformable objects and modifications of lighting, all in real-time. In

a pre-processing step, we discretize the object into a regular 4-connected structure

(QuadGraph). Due to its regular connectivity, this structure is easily packed into

a texture and stored on the GPU. At runtime, we use the QuadGraph stored on the

GPU to solve the diffusion equation, in real-time, taking into account the varying

input conditions: incoming light, object material and geometry. We handle deformable

objects, provided the deformation does not change the topological structure of the

objects.

Eurographics 2010 [ paper ] [ video ] [ bibtex ]

Computer Graphics Forum, Volume 29, Number 2, May 2010

|

|

All-Frequency Rendering of Dynamic, Spatially-Varying Reflectance

Jiaping Wang, Peiran Ren, Minmin Gong, John Snyder, Baining Guo

We describe a technique for real-time rendering of dynamic, spatially-varying BRDFs

in static scenes with all-frequency shadows from environmental and point lights.

The 6D SVBRDF is represented with a general microfacet model and spherical lobes

fit to its 4D spatially-varying normal distribution function (SVNDF). A sum of spherical

Gaussians (SGs) provides an accurate approximation with a small number of lobes.

Parametric BRDFs are fit on-the-fly using simple analytic expressions; measured

BRDFs are fit as a preprocess using nonlinear optimization. Our BRDF representation

is compact, allows detailed textures, is closed under products and rotations, and

supports reflectance of arbitrarily high specularity. At run-time, SGs representing

the NDF are warped to align the half-angle vector to the lighting direction and

multiplied by the microfacet shadowing and Fresnel factors. This yields the relevant

2D view slice on-the-fly at each pixel, still represented in the SG basis. We account

for macro-scale shadowing using a new, nonlinear visibility representation based

on spherical signed distance functions (SSDFs). SSDFs allow per-pixel interpolation

of high-frequency visibility without ghosting and can be multiplied by the BRDF

and lighting efficiently on the GPU.

Proceedings of ACM SIGGRAPH Asia 2009 [ paper ] [ video ] [ bibtex ]

ACM Transactions on Graphics,

Volume 28, Number 5, Aug 2009
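The abstract above notes that the spherical Gaussian (SG) representation is closed under products. A minimal sketch of that property follows, assuming the common SG parameterization G(v) = amplitude * exp(sharpness * (dot(v, axis) - 1)) with unit-length `v` and `axis`; the function names are hypothetical and this is not the paper's GPU implementation.

```python
import math


def sg_eval(v, axis, sharpness, amplitude):
    """Evaluate G(v) = amplitude * exp(sharpness * (dot(v, axis) - 1))."""
    d = sum(a * b for a, b in zip(v, axis))
    return amplitude * math.exp(sharpness * (d - 1.0))


def sg_product(axis1, sharp1, amp1, axis2, sharp2, amp2):
    """Return (axis, sharpness, amplitude) of the product of two SGs.

    The exponents add, so the combined lobe points along
    sharp1*axis1 + sharp2*axis2 and its sharpness is that vector's length.
    """
    u = [sharp1 * a + sharp2 * b for a, b in zip(axis1, axis2)]
    sharp = math.sqrt(sum(c * c for c in u))
    amp = amp1 * amp2 * math.exp(sharp - sharp1 - sharp2)
    if sharp == 0.0:
        # The lobes cancel exactly: the product is constant, any axis works.
        return list(axis1), 0.0, amp
    axis = [c / sharp for c in u]
    return axis, sharp, amp
```

Evaluating the two input lobes at any unit direction and multiplying the results gives the same value as evaluating the single lobe returned by `sg_product`, which is what allows products of SG-represented terms to stay in the SG basis.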

|

|

The Dual-microfacet Model for Capturing Thin Transparent Slabs

Qiang Dai, Jiaping Wang, Yiming Liu, John Snyder, Enhua Wu, Baining Guo

We present a new model, called the dual-microfacet, for those materials such as

paper and plastic formed by a thin, transparent slab lying between two surfaces

of spatially varying roughness. Light transmission through the slab is represented

by a microfacet-based BTDF which tabulates the microfacet’s normal distribution

(NDF) as a function of surface location. Though the material is bounded by two surfaces

of different roughness, we approximate light transmission through it by a virtual

slab determined by a single spatially-varying NDF. This enables efficient capturing

of spatially variant transparent slices. We describe a device for measuring this

model over a flat sample by shining light from a CRT behind it and capturing a sequence

of images from a single view. Our method captures both angular and spatial variation

in the BTDF and provides a good match to measured materials.

Proceedings of Pacific Graphics 2009, Distinguished Paper Award [ paper ] [ bibtex ]

Computer Graphics Forum, Volume 27, Number 3

|

|

Edit Propagation on Bidirectional Texture Functions

Kun Xu, Jiaping Wang, Xin Tong, Shi-Min Hu, Baining Guo

We propose an efficient method for editing bidirectional texture functions (BTFs)

based on an edit propagation scheme. In our approach, users specify sparse edits on

a certain slice of BTF. An edit propagation scheme is then applied to propagate

edits to the whole BTF data. The consistency of the BTF data is maintained by propagating

similar edits to points with similar underlying geometry/reflectance. For this purpose,

we propose to use view-independent features including normals and reflectance features

reconstructed from each view to guide the propagation process. We also propose an

adaptive sampling scheme for speeding up the propagation process. Since our method

does not need accurate geometry or reflectance information, it allows users to edit

complex BTFs with interactive feedback.

Proceedings of Pacific Graphics 2009 [ paper ] [ bibtex ]

Computer Graphics Forum, Volume 27, Number 3

|

|

Texture Splicing

Yiming Liu, Jiaping Wang, Su Xue, Xin Tong, Sing Bing Kang, Baining Guo

We propose a new texture editing operation called texture splicing. For this operation,

we regard a texture as having repetitive elements (textons) seamlessly distributed

in a particular pattern. Taking two textures as input, texture splicing generates

a new texture by selecting the texton appearance from one texture and distribution

from the other. Texture splicing involves self-similarity search to extract the

distribution, distribution warping, context-dependent warping, and finally, texture

refinement to preserve overall appearance. We show a variety of results to illustrate

this operation.

Proceedings of Pacific Graphics 2009 [ paper ] [ video ] [ bibtex ]

Computer Graphics Forum, Volume 27, Number 3

|

|

Kernel Nyström Method for Light Transport

Jiaping Wang, Yue Dong, Xin Tong, Zhouchen Lin, Baining Guo

We propose a kernel Nyström method for reconstructing the light transport matrix

from a relatively small number of acquired images. Our work is based on the generalized

Nyström method for low rank matrices. We introduce the light transport kernel and

incorporate it into the Nyström method to exploit the nonlinear coherence of the

light transport matrix. We also develop an adaptive scheme for efficiently capturing

the sparsely sampled images from the scene. Our experiments indicate that the kernel

Nyström method can achieve good reconstruction of the light transport matrix with

a few hundred images and produce high quality relighting results. The kernel Nyström

method is effective for modeling scenes with complex lighting effects and occlusions

which have been challenging for existing techniques.

Proceedings of ACM SIGGRAPH 2009 [ paper ] [ video ] [ bibtex ]

ACM Transactions on Graphics, Volume 28, Number 3, Aug 2009.
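For readers unfamiliar with the low-rank reconstruction that the abstract above builds on, it can be written out as follows. The notation is an assumption chosen for illustration: T is the full light transport matrix, A is the block where the sampled rows and sampled columns intersect, R and C are the remaining parts of the sampled rows and columns, B is the unobserved block, A^{+} is the pseudoinverse, and f is an elementwise kernel with inverse f^{-1} whose specific form is not given in the abstract.

```latex
T = \begin{bmatrix} A & R \\ C & B \end{bmatrix},
\qquad
B \approx C\,A^{+} R
\quad \text{(generalized Nystr\"om, exact when } \operatorname{rank}(A) = \operatorname{rank}(T)\text{)},
\qquad
B \approx f^{-1}\!\left( f(C)\, f(A)^{+} f(R) \right)
\quad \text{(kernel variant)}.
```

The kernel variant is useful when the elementwise-mapped matrix f(T) has lower rank than T itself, so that fewer sampled rows and columns suffice for the same reconstruction quality.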

|

|

Modeling Anisotropic Surface Reflectance with Example-based Microfacet Synthesis

Jiaping Wang, Shuang Zhao, Xin Tong, John Snyder, Baining Guo

We present a new technique for the visual modeling of spatially varying anisotropic

reflectance using data captured from a single view. Reflectance is represented using

a microfacet-based BRDF which tabulates the facets' normal distribution (NDF) as

a function of surface location. Data from a single view provides a 2D slice of the

4D BRDF at each surface point from which we fit a partial NDF. The fitted NDF is

partial because the single view direction coupled with the set of light directions

covers only a portion of the "half-angle" hemisphere. We complete the

NDF at each point by applying a novel variant of texture synthesis using similar,

overlapping partial NDFs from other points. Our similarity measure allows azimuthal

rotation of partial NDFs, under the assumption that reflectance is spatially redundant

but the local frame may be arbitrarily oriented. Our system includes a simple acquisition

device that collects images over a 2D set of light directions by scanning a linear

array of LEDs over a flat sample. Results demonstrate that our approach preserves

spatial and directional BRDF details and generates a visually compelling match to

measured materials.

Proceedings of ACM SIGGRAPH 2008 [ paper ] [ video ] [ bibtex ] [ Reflectance Data ]

ACM Transactions on Graphics, Volume 27, Number 3, Aug 2008.

|

|

An LED-only BRDF Measurement Device

Moshe Ben-Ezra, Jiaping Wang, Bennett Wilburn, Xiaoyang Li and Le Ma

Light Emitting Diodes (LEDs) can be used as light detectors and as light emitters.

In this paper, we present a novel BRDF measurement device consisting exclusively

of LEDs. Our design can acquire BRDFs over a full hemisphere, or even a full sphere

(for the bidirectional transmittance distribution function, BTDF), and can also

measure a (partial) multi-spectral BRDF. Because we use no cameras, projectors,

or even mirrors, our design does not suffer from occlusion problems. It is fast,

significantly simpler, and more compact than existing BRDF measurement designs.

Computer Vision and Pattern Recognition (CVPR), June 2008. [ paper ] [ bibtex ]

|

|

Image-based Material Weathering

Su Xue, Jiaping Wang, Xin Tong, Qionghai Dai, Baining Guo

We present a technique for modeling and editing the weathering effects of an object

in a single image with appearance manifolds. In our approach, we formulate the input

image as the product of reflectance and illuminance. An iterative method is then

developed to construct the appearance manifold in color space (i.e., Lab space)

for modeling the reflectance variations caused by weathering. Based on the appearance

manifold, we propose a statistical method to robustly decompose reflectance and

illuminance for each pixel. For editing, we introduce a "pixel-walking"

scheme to modify the pixel reflectance according to its position on the manifold,

by which the detailed reflectance variations are well preserved. We illustrate our

technique in various applications, including weathering transfer between two images

that is first enabled by our technique.

Eurographics 2008 [ paper ] [ video ] [ bibtex ]

Computer Graphics Forum Volume 27 Issue 2, Apr 2008.

|

|

Modeling and Rendering Heterogeneous Translucent Materials using Diffusion Equation

Jiaping Wang, Shuang Zhao, Xin Tong, Stephen Lin, Zhouchen Lin, Yue Dong, Baining Guo, Heung-Yeung Shum

We propose techniques for modeling and rendering of heterogeneous translucent materials

that enable acquisition from measured samples, interactive editing of material attributes,

and real-time rendering. The materials are assumed to be optically dense such that

multiple scattering can be approximated by a diffusion process described by the

diffusion equation. For modeling heterogeneous materials, we present an algorithm

for acquiring material properties from appearance measurements by solving an inverse

diffusion problem. Our modeling algorithm incorporates a regularizer to handle the

ill-conditioned inverse problem, an adjoint method to dramatically reduce the computational

cost, and a hierarchical GPU implementation for further speedup. To display an object

with known material properties, we present an algorithm that performs rendering

by solving the diffusion equation with the boundary condition defined by the given

illumination environment. This algorithm is centered around object representation

by a polygrid, a grid with regular connectivity and an

irregular shape, which facilitates the solution of the diffusion equation in arbitrary

volumes. Because of the regular connectivity, our rendering algorithm can be implemented

on the GPU for real-time performance. We demonstrate our techniques by capturing

materials from physical samples and performing real-time rendering and editing with

these materials.

ACM Transactions on Graphics, Vol. 27, Issue 1, 2008. (ACM SIGGRAPH 2007 Referred to) [ paper ] [ video ] [ bibtex ]

|

|

Spherical Harmonics Scaling

Jiaping Wang, Kun Xu, Kun Zhou, Stephen Lin, Shimin Hu, Baining Guo

We present a new SH operation, called spherical harmonics scaling,

to shrink or expand a spherical function in frequency domain. We show that this

problem can be elegantly formulated as a linear transformation of SH projections,

which is efficient to compute and easy to implement on a GPU. Spherical harmonics

scaling is particularly useful for extrapolating visibility and radiance functions

at a sample point to points closer to or farther from an occluder or light source.

With SH scaling, we present applications to low-frequency shadowing for general deformable

objects, and to efficient approximation of spherical irradiance functions within

a mid-range illumination environment.

Pacific Conference on Computer Graphics and Applications, Oct 2006. [ paper ] [ video ] [ bibtex ]

The Visual Computer, Volume 22, Sept 2006.

|

|

Appearance Manifolds for Modeling Time-Variant Appearance of Materials

Jiaping Wang, Xin Tong, Stephen Lin, Minghao Pan, Chao Wang, Hujun Bao, Baining Guo and Heung-Yeung Shum

We present a visual simulation technique called appearance manifolds

for modeling the time-variant surface appearance of a material from data captured

at a single instant in time. In modeling time-variant appearance, our method takes

advantage of the key observation that concurrent variations in appearance over a

surface represent different degrees of weathering. By reorganizing these various

appearances in a manner that reveals their relative order with respect to weathering

degree, our method infers spatial and temporal appearance properties of the material’s

weathering process that can be used to convincingly generate its weathered appearance

at different points in time. Results with natural non-linear reflectance variations

are demonstrated in applications such as visual simulation of weathering on 3D models,

increasing and decreasing the weathering of real objects, and material transfer

with weathering effects.

Proceedings of ACM SIGGRAPH, Aug 2006. [ paper ] [ video ] [ slides ] [ bibtex ]

ACM Transactions on Graphics, Volume 25, Issue 3, July 2006.

|

|

Capturing and Rendering Geometry Details for BTF-mapped Surfaces

Jiaping Wang, Xin Tong, John Snyder, Yanyun Chen, Baining Guo and Heung-Yeung Shum

Bidirectional texture functions or BTFs accurately model reflectance variation at

a fine (meso-) scale as a function of lighting and viewing direction. BTFs also

capture view-dependent visibility variation, also called masking or parallax, but

only within surface contours. Mesostructure detail is neglected at silhouettes,

so BTF-mapped objects retain the coarse shape of the underlying model.

We augment BTF rendering to obtain approximate mesoscale silhouettes. Our new representation,

the 4D mesostructure distance function (MDF), tabulates

the displacement from a reference frame where a ray first intersects the meso-scale

geometry beneath, as a function of ray direction and ray position along that reference

plane. Given an MDF, the mesostructure silhouette can be rendered with a per-pixel

depth peeling process on graphics hardware, while shading and local parallax are

handled by the BTF. Our approach allows real-time rendering, handles complex, non-height-field

mesostructure, requires no additional geometry to be sent to the rasterizer

other than the mesh triangles, is more compact than textured visibility representations

used previously, and for the first time can be easily measured from physical samples.

We also adapt the algorithm to capture detailed shadows cast both by and onto BTF-mapped

surfaces. We demonstrate the efficiency of our algorithm on a variety of BTF data,

including real data acquired using our BTF-MDF measurement

Pacific Conference on Computer Graphics and Applications, Oct 2005. [ paper ] [ bibtex ]

The Visual Computer, Volume 21, Sept 2005.

|

|

Modeling and Rendering of Quasi-Homogeneous Materials

Xin Tong, Jiaping Wang, Stephen Lin, Baining Guo and Heung-Yeung Shum

Many translucent materials consist of evenly-distributed heterogeneous elements

which produce a complex appearance under different lighting and viewing directions.

For these quasi-homogeneous materials, existing techniques

do not address how to acquire their material representations from physical samples

in a way that allows arbitrary geometry models to be rendered with these materials.

We propose a model for such materials that can be readily acquired from physical

samples. This material model can be applied to geometric models of arbitrary shapes,

and the resulting objects can be efficiently rendered without expensive subsurface

light transport simulation.

In developing a material model with these attributes, we capitalize on a key observation

about the subsurface scattering characteristics of quasi-homogeneous materials at

different scales. Locally, the non-uniformity of these materials leads to inhomogeneous

subsurface scattering. For subsurface scattering on a global scale, we show that

a lengthy photon path through an even distribution of heterogeneous elements statistically

resembles scattering in a homogeneous medium. This observation allows us to represent

and measure the global light transport within quasi-homogeneous materials as well

as the transfer of light into and out of a material volume through surface mesostructures.

We demonstrate our technique with results for several challenging materials that

exhibit sophisticated appearance features such as transmission of back illumination

through surface mesostructures.

Proceedings of ACM SIGGRAPH, Aug 2005. [ paper ] [ bibtex ]

ACM Transactions on Graphics, Volume 24, Issue 3, July 2005.

|

|

Shell Texture Functions

Xin Tong, Yanyun Chen, Jiaping Wang, Stephen Lin, Baining Guo and Heung-Yeung Shum

We propose a texture function for realistic modeling and efficient rendering of

materials that exhibit surface mesostructures, translucency and volumetric texture

variations. The appearance of such complex materials for dynamic lighting and viewing

directions is expensive to calculate and requires an impractical amount of storage

to precompute. To handle this problem, our method models an object as a shell layer,

formed by texture synthesis of a volumetric material sample, and a homogeneous inner

core. To facilitate computation of surface radiance from the shell layer, we introduce

the shell texture function (STF) which describes voxel

irradiance fields based on precomputed fine-level light interactions such as shadowing

by surface mesostructures and scattering of photons inside the object. Together

with a diffusion approximation of homogeneous inner core radiance, the STF leads

to fast and detailed renderings of complex materials by raytracing.

Proceedings of ACM SIGGRAPH, Aug 2004. [ paper ] [ bibtex ]

ACM Transactions on Graphics Volume 23, Issue 3, Aug 2004.

|

Toys in the Old Site

|
